Exploring the ‘data elite’ company and what solutions they have to offer

on Unsplash*](https://cdn-images-1.medium.com/max/3200/0*ON9hm-DYPj1g5XYk)

Breaking (Data)brick By Brick!

Founded in 2013 by the real OGs… the creators of Apache Spark, Delta Lake, and MLflow, Databricks is a single platform for all your data needs. It is a software (Data + AI) company that offers a Unified Data Analytics Platform (UDAP) and is basically built on a modern Lakehouse architecture in the cloud.

At present, Databricks is one of the fastest-growing data services on AWS and Azure with its headquarters in San Francisco and offices around the world serving over 5000 customers and over 450 partners worldwide. The company has recently hit a $28 billion valuation thereby setting in stone the company’s vision and mission of democratizing and simplifying Data and AI to help data teams resolve any and every problem.

The tricks behind Databricks

The current version of Databricks 7.3 LTS operates over Apache 3.0.1 and supports a host of analytical capabilities that can work towards enhancing the outcome of your Data Pipeline. Leveraging Apache Spark for computational capabilities, Databricks supports several programming languages like R, Python, Scala, and SparkSQL/SQL for preparing code, making it imperative for the coders to have a grasp of the languages in order to optimally utilize the capabilities of Databricks platform.

But before I delve deeper, let me give you a brief idea about Apache Spark (an obvious prerequisite).

Apache Spark is an open-source, blazing-fast cluster computing technology, designed for, well, faster calculations. Used for huge data workloads, Spark is a distributed processing system with its main features being ‘optimized query execution’ and ‘in-memory caching’ both aiding in increasing the processing speed of the application. Apache Spark achieves this high performance for both batch and streaming data using a state-of-the-art DAG scheduler.

Spark offers over 80 high-level operators to effortlessly build parallel apps. You can even use it interactively from the Scala, Python, R, and SQL shells. Spark powers a stack of libraries and you can combine SQL, streaming, and complex analytic seamlessly.

Why pick Databricks?

The best of data warehouses meet the best of data lakes, offering an open and unified platform for data and AI.

To put it simply in the words of the company, Databricks is:

A single place for all your data

A base foundation for every workload — from BI to AI

A single platform that runs everywhere

A single platform that brings everything together — Lakehouse

Salient Features of Databricks Platform:

Collaborative Notebooks — allowing DE and DS to work together

Reliable Data Engineering

Production Machine Learning

SQL Analytics on all your data

What are the key constituents of Databricks?

Notebook: It is a web-based interface to a document that contains runnable (executable) codes and commands, visualizations, and narrative text.

Dashboard: It is an interface that provides organized access to visualizations.

Library: It is a package of codes available to the notebook or job running on your cluster. Databricks runtimes consist of many libraries with an option of adding your own.

Experiment: It is a collection of MLflow that runs for training a machine learning model.

As compared to AWS, GCP, and AZURE, Databricks positions itself as a unified data platform.

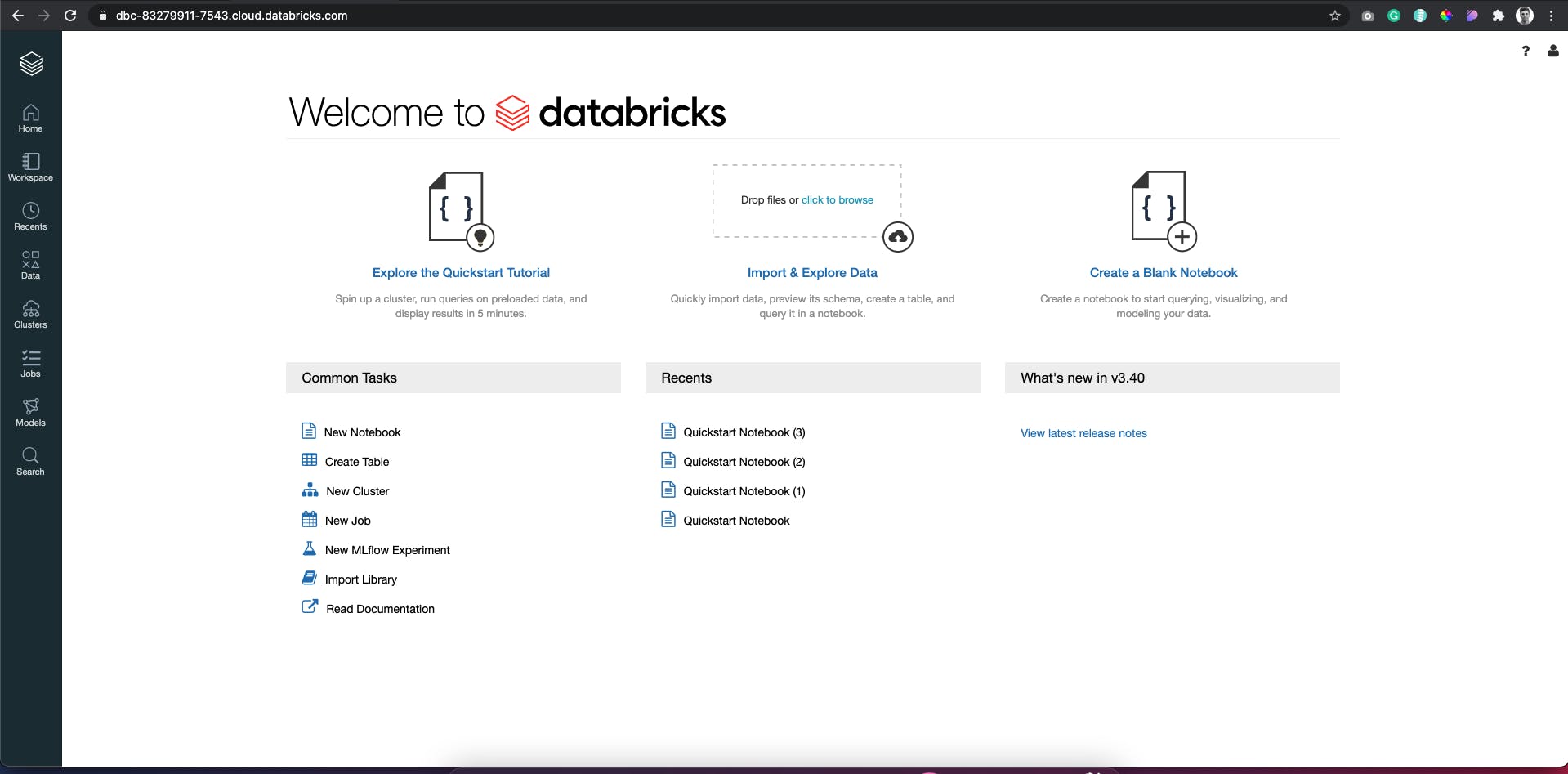

A Sneak Peek: Unified Data Analytics Platform

Screenshot of my databricks instance

Screenshot of my databricks instance

UDAP can be broadly divided into:

Data Science Workspace: The Workspace provides a physical location for collaborative working to your Data Science team, right from data ingestion to data analysis. Depending on the data practitioner’s task or roles assigned, the team can employ different functionalities.

UD Service: Let’s just say it is the powerhouse fueling the work data practitioner’s performance in the Data Science Workspace. Right from Databricks Runtime to Delta Lakes to Databricks ingest, it takes care of everything.

Enterprise Cloud Service: From maintaining end-to-end security to ensuring production-ready infrastructure, it enables organizations to not only set up but also secure, manage, and scale their platform.

Unified Data Analytics (UDA) combines data processing with AI technologies offering better, meaningful insights and solutions from the data made available.

Getting started with databricks? Want to know more?

My two cents on Databricks

While some might consider entirely AI-driven businesses to be farfetched, one cannot deny the fact that the future really arrives sooner than most people can imagine it. Companies across the world are already adopting and applying innovative and over 80 distinct uses for Databricks’ tools in operation for better performances, giving them an edge over their competitors. With over a hundred global partners such as Microsoft, Amazon, Tableau, Informatica, Cap Gemini, and Booz Allen Hamilton, to name but a few, standing as a testament to the fact that Data and AI-driven businesses are the future, all I can say is Databricks is a platform to watch out for!