Creating a Machine Learning Model with SQL

Build an ML model using SQL on Google Big Query

Even though Machine Learning is already really advanced, however, it has some weaknesses which can make it hard for you to use it.

Current machine learning workflows and its problems

- If you've worked with ml models you may realize that structure and preparing them can be extremely time-escalated.

- For a typical information researcher should initially trade limited quantities of information from information store into I-Python note pad and into information taking care of structures like pandas for python.

- In case you're assembling a custom model you first need to change and pre-process all that information and play out all that component designing before you can even take care of the model information in.

- Then, at that point, at long last after you've fabricated your model and say TensorFlow another comparable library then you train it locally on your PC or on a VM doing that with a little model then, at that point, expects you to return and make all the more new information includes and further develop execution and you rehash and rehash and rehash furthermore, it's hard so you stop after a couple of cycles

But hey there, don’t worry! you can work on building models, even if you are not the data scientist of your team.

Introducing to Google BigQuery

Photo by Patrick Lindenberg on Unsplash

BigQuery is a fully-managed petabyte-scale enterprise data warehouse. It is basically made up of two key things

- Fast SQL query engine

- Fully managed data storage

Big Query supports querying petabytes of data with standard SQL that everyone is used to.

Example:

#standardSQL

SELECT

COUNT(*) AS total_trips

FROM

`bigquery-public-data.san_francisco_bikeshare.bikeshare_trips`

Other Big Query Key features

- Serverless

- Flexible pricing model

- Standard GCP Data encryption and security

- Perfect for BI and AI use cases

- ML and predictive modeling with BigQuery ML

- Really cheap storage - same as Google cloud storage buckets

- Interactive data analysis with BigQuery BI Engine - connect to tableau, data studio, looker, etc

Big query and its ml features

As a part of one of the main features, big query allows building predictive machine learning models with just simple SQL syntax. With the petabyte processing power of google cloud, you can easily create models right there in the warehouse. A sample syntax to create models looks like this

CREATE OR REPLACE MODEL `dataset.classification_model`

OPTIONS

(

model_type='logistic_reg',

labels = ['y']

)

AS

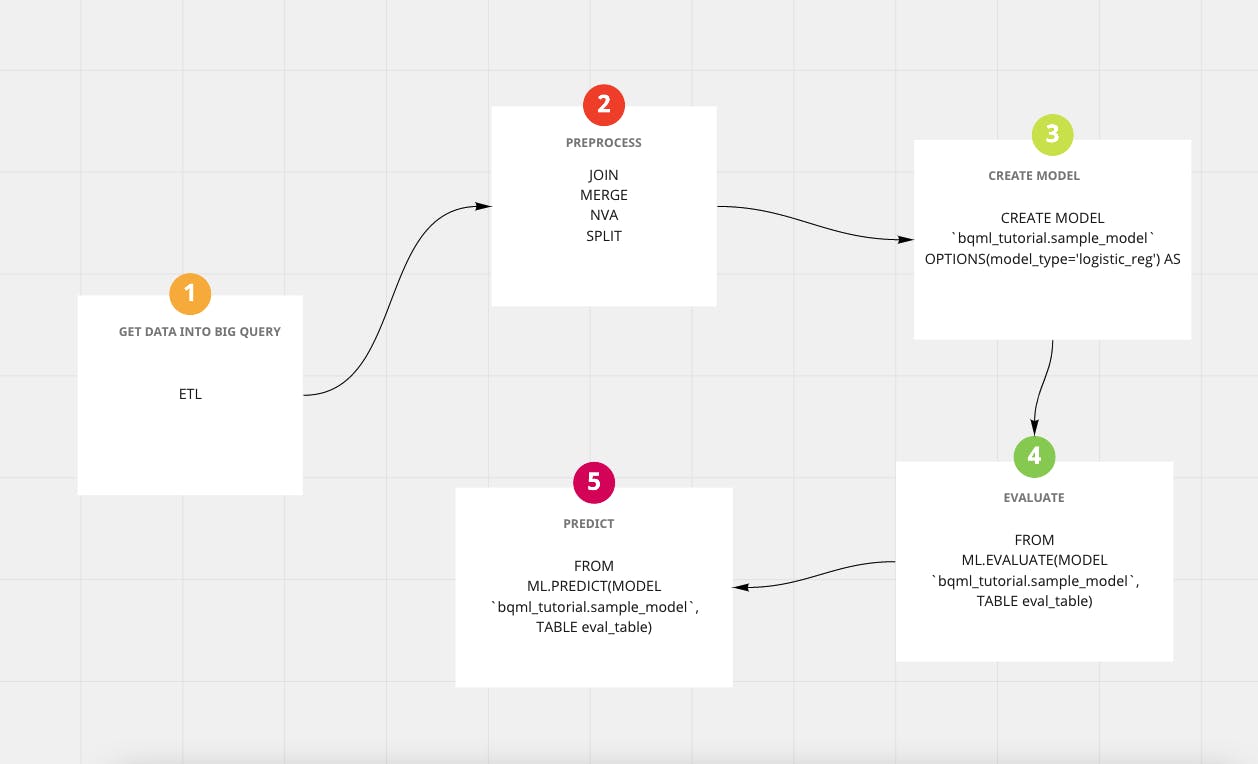

A typical workflow using Big Query ML [5 Step]

Flowchart diagram from the author

Tutorial: Building a classification model with big query ML (Simple SQL Syntax)

You can open up a big query console and start replicating the steps below:

OR

You can watch a Video Tutorial I created

Dataset

I am using a public big query dataset google_analytics_sample . The dataset provides 12 months (August 2016 to August 2017) of obfuscated Google Analytics 360 data from the Google Merchandise Store. The data includes The data is typical of what an ecommerce website would see and includes the following information:

Traffic source data: information about where website visitors originate, including data about organic traffic, paid search traffic, and display traffic Content data: information about the behavior of users on the site, such as URLs of pages that visitors look at, how they interact with content, etc. Transactional data: information about the transactions on the Google Merchandise Store website.

Dataset license: A public dataset is any dataset that is stored in BigQuery and made available to the general public through the Google Cloud Public Dataset Program. The public datasets are datasets that BigQuery hosts for you to access and integrate into your applications. Google pays for the storage of these datasets and provides public access to the data via a project. You pay only for the queries that you perform on the data. The first 1 TB per month is free, subject to query pricing details. Under Creative Commons Attribution 4.0 License

Machine Learning problem we will try to solve

We will try to predict if the user will buy products on return visit, hence we name our label will_buy_on_return_visit

Step1: Exploring the dataset

- Checking conversion rate

WITH visitors AS(

SELECT

COUNT(DISTINCT fullVisitorId) AS total_visitors

FROM `bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

),

purchasers AS(

SELECT

COUNT(DISTINCT fullVisitorId) AS total_purchasers

FROM `bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

WHERE totals.transactions IS NOT NULL

)

SELECT

total_visitors,

total_purchasers,

total_purchasers / total_visitors AS conversion_rate

FROM visitors, purchasers

- What are the top 5 selling products?

SELECT

p.v2ProductName,

p.v2ProductCategory,

SUM(p.productQuantity) AS units_sold,

ROUND(SUM(p.localProductRevenue/1000000),2) AS revenue

FROM `bigquery-public-data.google_analytics_sample.ga_sessions_20170801`,

UNNEST(hits) AS h,

UNNEST(h.product) AS p

GROUP BY 1, 2

ORDER BY revenue DESC

LIMIT 5;

- Question: How many visitors bought on subsequent visits to the website?

WITH all_visitor_stats AS (

SELECT

fullvisitorid, # 741,721 unique visitors

IF(COUNTIF(totals.transactions > 0 AND totals.newVisits IS NULL) > 0, 1, 0) AS will_buy_on_return_visit

FROM `bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

GROUP BY fullvisitorid

)

SELECT

COUNT(DISTINCT fullvisitorid) AS total_visitors,

will_buy_on_return_visit

FROM all_visitor_stats

GROUP BY will_buy_on_return_visit

Step 2. Select features and create your training dataset

Now that we know a bit more about the data, let's finalize and create a final dataset we want to use for training

SELECT

* EXCEPT(fullVisitorId)

FROM

# features

(SELECT

fullVisitorId,

IFNULL(totals.bounces, 0) AS bounces,

IFNULL(totals.timeOnSite, 0) AS time_on_site

FROM

`bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

WHERE

totals.newVisits = 1)

JOIN

(SELECT

fullvisitorid,

IF(COUNTIF(totals.transactions > 0 AND totals.newVisits IS NULL) > 0, 1, 0) AS will_buy_on_return_visit

FROM

`bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

GROUP BY fullvisitorid)

USING (fullVisitorId)

ORDER BY time_on_site DESC

LIMIT 10;

Step 3: Create a Model

This step uses a create model statement over the dataset created in previous step

CREATE OR REPLACE MODEL `ecommerce.classification_model`

OPTIONS

(

model_type='logistic_reg',

labels = ['will_buy_on_return_visit']

)

AS

#standardSQL

SELECT

* EXCEPT(fullVisitorId)

FROM

# features

(SELECT

fullVisitorId,

IFNULL(totals.bounces, 0) AS bounces,

IFNULL(totals.timeOnSite, 0) AS time_on_site

FROM

`bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

WHERE

totals.newVisits = 1)

JOIN

(SELECT

fullvisitorid,

IF(COUNTIF(totals.transactions > 0 AND totals.newVisits IS NULL) > 0, 1, 0) AS will_buy_on_return_visit

FROM

`bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

GROUP BY fullvisitorid)

USING (fullVisitorId)

;

Step 4: Evaluate classification model performance

Evaluate the performance of the model you just created using SQL

SELECT

roc_auc,

CASE

WHEN roc_auc > .9 THEN 'good'

WHEN roc_auc > .8 THEN 'fair'

WHEN roc_auc > .7 THEN 'not great'

ELSE 'poor' END AS model_quality

FROM

ML.EVALUATE(MODEL ecommerce.classification_model, (

SELECT

* EXCEPT(fullVisitorId)

FROM

# features

(SELECT

fullVisitorId,

IFNULL(totals.bounces, 0) AS bounces,

IFNULL(totals.timeOnSite, 0) AS time_on_site

FROM

`bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

WHERE

totals.newVisits = 1

AND date BETWEEN '20170501' AND '20170630') # eval on 2 months

JOIN

(SELECT

fullvisitorid,

IF(COUNTIF(totals.transactions > 0 AND totals.newVisits IS NULL) > 0, 1, 0) AS will_buy_on_return_visit

FROM

`bigquery-public-data.google_analytics_sample.ga_sessions_20170801`

GROUP BY fullvisitorid)

USING (fullVisitorId)

));

Future steps and feature engineering

If you are further interested in improving the performance of the model, you can always opt-in for adding in more features from the dataset

Conclusion

Products like Google's Big Query ML make building machine learning models accessible to more people. With simple SQL syntax, and googles processing power it is really easy to churn out real-life big data models.